I'm a fan of infrastructure-level (polyglot) service meshes. The premise excites me as much as Android did ten years ago. Having worked over those years with SOA, EDA, REST Hypermedia APIs, API Management, Microservices and language-bound service meshes (Netflix / Pivotal, Lightbend's Reactive), though, I tend to be careful about the resolution of their promises.

This post is about three lesser-known effects of service meshes. My last post already covered complexity, emergence and observability in a more general way, so I'll keep those topics brief.

Reasoning

Horizontal scalability in the multi-core age is one of the main arguments behind basically all modern stateless software architectures. Consistency in distributed systems, immutability and state handling are often cited as their common properties, typically to justify functional programming paradigms. To me, though, their most important common property seems to be a complex, emergent network graph structure. Layers and tiers cannot represent contemporary systems anymore. Those systems have a complex adaptive graph structure in space (infrastructure, users, component interactions) and in time (versioning / one codebase, rainbow releases, experiments, DevOps, event order, routing).

In recent years, many frameworks have quietly moved towards declarative graph alterations. The first I remember were Android Intents and Puppet. More recently it was React, TensorFlow, Beam, Kubernetes and Eve (RIP), not to forget the re-emergence of SQL combined with flexible consistency models and stream processing.

All of those come with a robust, well-defined domain vocabulary and a set of patterns that allow people to define the desired behaviour precisely. A graph encourages modularization and reuse, and it allows for division of labor: better specialization, while making the overall concept easier to understand. This, in turn, allows a wider, more diverse group of people to reason and converse about the behaviour of the system. The shared language and culture will hopefully enable them to learn alongside the system, which Nora Bateson calls "symmathesy". This requires all actors in the system to define goals and dependencies, versioned together in one codebase across layers and components, along with documentation, tests (specs), customer support feedback and architectural decisions. That's why all good (micro)service architecture principles include continuous delivery and lean.

"There is no single continuous integration and delivery setup that will work for everyone. You are essentially trying to automate your company's culture using bash scripts." (Kelsey Hightower, @kelseyhightower, November 20, 2017)

The biggest difference between those graph-based declarative approaches and model-driven concepts (MDD/MDA) is that the former are bottom-up and designed to support evolution*. Instead of requiring an external canonical model, a tribal ("bounded") domain language or strict interface contracts, they make it very easy to implement domain-event messaging at the infrastructure level, because the infrastructure itself carries meaning. This allows for independently composed distributed systems; in other words, systems that are choreographed rather than orchestrated.
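To make "choreographed rather than orchestrated" a bit more concrete, here is a minimal Python sketch. The in-process `EventBus` is only a stand-in for the messaging a mesh or broker would provide at the infrastructure level, and the services and event names (`OrderPlaced`, `StockReserved`, `PaymentCaptured`) are hypothetical, not part of any particular mesh API.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


def place_order_orchestrated(order: str, log: List[str]) -> None:
    """Orchestration: one coordinator knows and invokes every step in order."""
    log.append(f"reserve stock for {order}")
    log.append(f"charge payment for {order}")
    log.append(f"ship {order}")


class EventBus:
    """Minimal in-process stand-in for infrastructure-level event messaging."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Callable[[str], None]) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: str) -> None:
        # Deliver synchronously to every subscriber of this event type.
        for handler in self._subscribers[event_type]:
            handler(payload)


def wire_services(bus: EventBus, log: List[str]) -> None:
    """Choreography: each hypothetical service only knows the domain events it
    consumes and emits; no single component holds the whole workflow."""

    def inventory(order: str) -> None:
        log.append(f"reserve stock for {order}")
        bus.publish("StockReserved", order)

    def payment(order: str) -> None:
        log.append(f"charge payment for {order}")
        bus.publish("PaymentCaptured", order)

    def shipping(order: str) -> None:
        log.append(f"ship {order}")

    bus.subscribe("OrderPlaced", inventory)
    bus.subscribe("StockReserved", payment)
    bus.subscribe("PaymentCaptured", shipping)


# Both styles produce the same steps; only the coupling differs.
orchestrated: List[str] = []
place_order_orchestrated("order-1", orchestrated)

choreographed: List[str] = []
bus = EventBus()
wire_services(bus, choreographed)
bus.publish("OrderPlaced", "order-1")
```

The design point is where the knowledge lives: in the orchestrated version the workflow sits in one function, while in the choreographed version it only exists as the graph of subscriptions, which is exactly the kind of graph a mesh can make visible.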

The declarative, domain-event-driven approach does share some advantages with MDA, though: the vocabulary, the patterns and a visible graph of dependencies. But it is a lot easier to follow and a lot harder to ignore. Once the implications of changes to the graph are commonly understood, it's a lot easier to reason about the graph (see, for instance, the original Flume paper). At the low level that service meshes target, the infrastructure level, this quality makes it a lot easier to reason about and iteratively learn the entire system (including the mesh itself and the DevOps process around it), and to version, document, track and test it.

Convergence

One obvious omission from the list of declarative approaches above is Maven. Dependency management has been an under-appreciated enabler of evolutionary architectures. IMHO, RPM, Maven (including Android), npm, GitHub (GitOps) and Docker made platform-agnostic, isolated components possible. Maybe it's just nostalgia, having wrestled with each of those and learned the standard lifecycles, dependency graphs (and dependency hell) and application composition along the way. But I do like to think that they brought the internet, the complex network graph emerging over time, into software engineering (as close as we can get to a "Software Internet" in the words of Alan Kay or, in other words, the "Datacenter as a Computer"), and that they still push for innovation. Imagine route-based code splitting used for serverless scaling.
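The dependency graphs these tools resolve are directed acyclic graphs, and installing or building means finding a topological order; a cycle is the simplest form of dependency hell. A minimal sketch using Python's standard `graphlib` module (Python 3.9+); the component names are hypothetical, and real package managers add version resolution on top of this.

```python
from graphlib import CycleError, TopologicalSorter  # Python 3.9+

# Hypothetical components mapped to their direct dependencies.
dependencies = {
    "web-app": {"http-client", "logging"},
    "http-client": {"tls", "logging"},
    "tls": set(),
    "logging": set(),
}


def install_order(deps):
    """Return an order in which every dependency precedes its dependents."""
    return list(TopologicalSorter(deps).static_order())


def has_cycle(deps):
    """Detect the simplest form of dependency hell: a circular dependency."""
    try:
        TopologicalSorter(deps).prepare()
    except CycleError:
        return True
    return False


order = install_order(dependencies)
```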

For instance, the Istio service mesh has many architectural, release management and DevOps principles baked in, and it allows you to visualize them as graphs. Istio follows the Kubernetes (aka Unix aka TCP) philosophy of flexibility and robustness by being able to run in concert with Consul, Eureka, Mesos DC/OS and others. The principles and ideas behind Istio and Kubernetes translate across platforms; therefore, application styles converge. APIs for mobile apps can be defined in universally accepted ways, and concepts like streaming can be used on both the client and the server side while still relying on a robust, secure and efficient platform. That's what cloud native really means: mashups between service components, because they share the same ideas.

With APIs also turning into declarative graphs (REST evangelists would add the word "finally"), it's only a matter of time until service meshes unify call, event and function semantics and make consistency and synchronisation a property (as in gRPC). I am not sure whether serverless Kubernetes is the future, as Brendan or Simon say, since functions themselves can be just as iterative and incomprehensible. But assuming this starting point, hopefully with a bit more transparency, serverless systems could pick up data from process mining and reroute service requests or events to the best backend, just like traffic-routing rules do today: based on probabilistic distributions and quality guarantees (and trade-offs, see below) that the engineers define.
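Probabilistic routing of this kind can be sketched in a few lines. The following Python sketch assumes a hypothetical routing table of relative weights (in the spirit of a weighted canary split) and a hypothetical policy that derives new weights from observed latencies; it illustrates the idea only and is not Istio's configuration model or API.

```python
import random
from collections import Counter

# Hypothetical routing table: backend -> relative traffic weight,
# in the spirit of a weighted canary split (90% stable, 10% canary).
routes = {"checkout-v1": 90, "checkout-v2": 10}


def pick_backend(routes, rng=random):
    """Choose one backend with probability proportional to its weight."""
    backends = list(routes)
    return rng.choices(backends, weights=[routes[b] for b in backends], k=1)[0]


def reweight_by_latency(latencies_ms):
    """Hypothetical policy: weight each backend inversely to its observed
    latency and normalize the result to integer percentages."""
    inverse = {b: 1.0 / ms for b, ms in latencies_ms.items()}
    total = sum(inverse.values())
    return {b: round(100 * v / total) for b, v in inverse.items()}


rng = random.Random(42)  # seeded so the sample is reproducible
sample = Counter(pick_backend(routes, rng) for _ in range(10_000))
new_routes = reweight_by_latency({"checkout-v1": 50.0, "checkout-v2": 150.0})
```

Inverse-latency weighting is just one possible policy; a real system would also have to respect the quality guarantees and trade-offs the engineers declare, for instance a minimum share of traffic for a canary.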

Approachability

Declaration is Documentation. As mentioned above, service meshes are a great tool to describe not only the requirements but also the expected and actual behaviour of the system. This makes the system approachable for non-engineers: it allows every stakeholder, from usability designer to product manager to customer support specialist, to understand system primitives and their trade-offs, and to express their requirements in the same terms.

One problem with distributed, evolutionary meshes, as with all graph-based frameworks, lies in exactly those system primitives: the basic principles of the domain have to be universally accepted, and therefore simple enough to be learnable and approachable from the outside, yet powerful enough to describe complexity, without requiring a canonical model or increasing coordination cost (i.e. managers). You can think of it as essentially the same problem as orchestration vs. choreography. Orchestration, i.e. implicit models, works better at the start of a project, when someone has a clear idea of what to do; it is faster to iterate on. However, at some point the effort of communicating that idea to a team eliminates the iteration efficiency. Smaller components help up to a certain point, but they still rely on the one engineer who holds the concept in her head. Declarative approaches, like choreography, require more learning in the beginning, but can scale horizontally based on a few core rules and consensus mechanisms.

It took me a long time to understand why SRE / CRE are so focused on measuring SLOs, toil and errors. Finally it clicked: in order to improve how we deal with risks, their effects have to be measurable (carefully balanced against Goodhart's Law), as risk itself is just probability. In the end, architecture is risk management, and risk vs. delight is something most designers, support teams, product managers, product owners, business people or clients can work with (or at least have some defined biases to test for). There are enough metaphors in software engineering for everyone to have a favourite. Describing system behaviour as a hierarchy of interacting behaviours is something that can be translated into metaphors for almost every domain. Good metrics are not just figures; they are metaphors, a model, a map that naturally favours simplicity over complexity. Approachable system boundaries also allow for easier planning (estimation, decision-making) and for focusing types of work or automation.
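A common SRE way of making risk measurable is an error budget derived from an SLO: the SLO states the fraction of requests that must succeed, and its complement is the amount of failure the team may "spend" within a window. A minimal Python sketch, assuming a simple request-based availability SLO; the numbers are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SLO:
    """Availability objective: the fraction of requests that must succeed."""
    target: float  # e.g. 0.999 for "three nines"


def error_budget(slo: SLO, total_requests: int) -> float:
    """How many failed requests the SLO tolerates within the window."""
    return (1.0 - slo.target) * total_requests


def budget_remaining(slo: SLO, total_requests: int, failed_requests: int) -> float:
    """Fraction of the error budget left; negative means the SLO is violated."""
    budget = error_budget(slo, total_requests)
    return (budget - failed_requests) / budget


slo = SLO(target=0.999)
budget = error_budget(slo, 1_000_000)               # about 1000 failures allowed
remaining = budget_remaining(slo, 1_000_000, 250)   # about 75% of the budget left
```

Expressed this way, the risk becomes a number that a product manager or support lead can reason about just as well as an engineer: "we have three quarters of this month's budget left" is a statement about risk, not about infrastructure.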

Stakeholders can all define the confidence level and tune the behaviour to meet the requirements. The evolutionary* aspects of Agile, Lean, Design Thinking and DevOps are, in this sense, all variations of Box's loop. From this perspective, an SLO can be seen as a fitness function*: an objective assessment of an architectural concern (sometimes called the "ilities") and of how to optimize it within the system (that is, including the team), for instance how to fix tech debt. In other words, as I said in the talk: SRE is probably closer to the Architect role than most Architects have ever been.
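Read as a fitness function, a set of SLOs becomes an automated, objective check that could, for example, gate a release in CI. The Python sketch below assumes hypothetical metric names and thresholds, and uses the nearest-rank definition of a percentile, which is only one of several common definitions.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    metric: str              # hypothetical metric name, e.g. "latency_p99_ms"
    threshold: float
    higher_is_better: bool


def percentile(values, p):
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def fitness(objectives, measurements):
    """Evaluate every objective; return (passed, names of failed metrics)."""
    failed = []
    for obj in objectives:
        value = measurements[obj.metric]
        ok = value >= obj.threshold if obj.higher_is_better else value <= obj.threshold
        if not ok:
            failed.append(obj.metric)
    return (not failed, failed)


objectives = [
    Objective("availability", 0.999, higher_is_better=True),
    Objective("latency_p99_ms", 250.0, higher_is_better=False),
]
latencies = [120.0] * 98 + [300.0, 900.0]
measurements = {"availability": 0.9995, "latency_p99_ms": percentile(latencies, 99)}
passed, failed = fitness(objectives, measurements)
```

Returning the names of the failed objectives, rather than a bare boolean, keeps the assessment explainable to every stakeholder who defined one of the thresholds.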

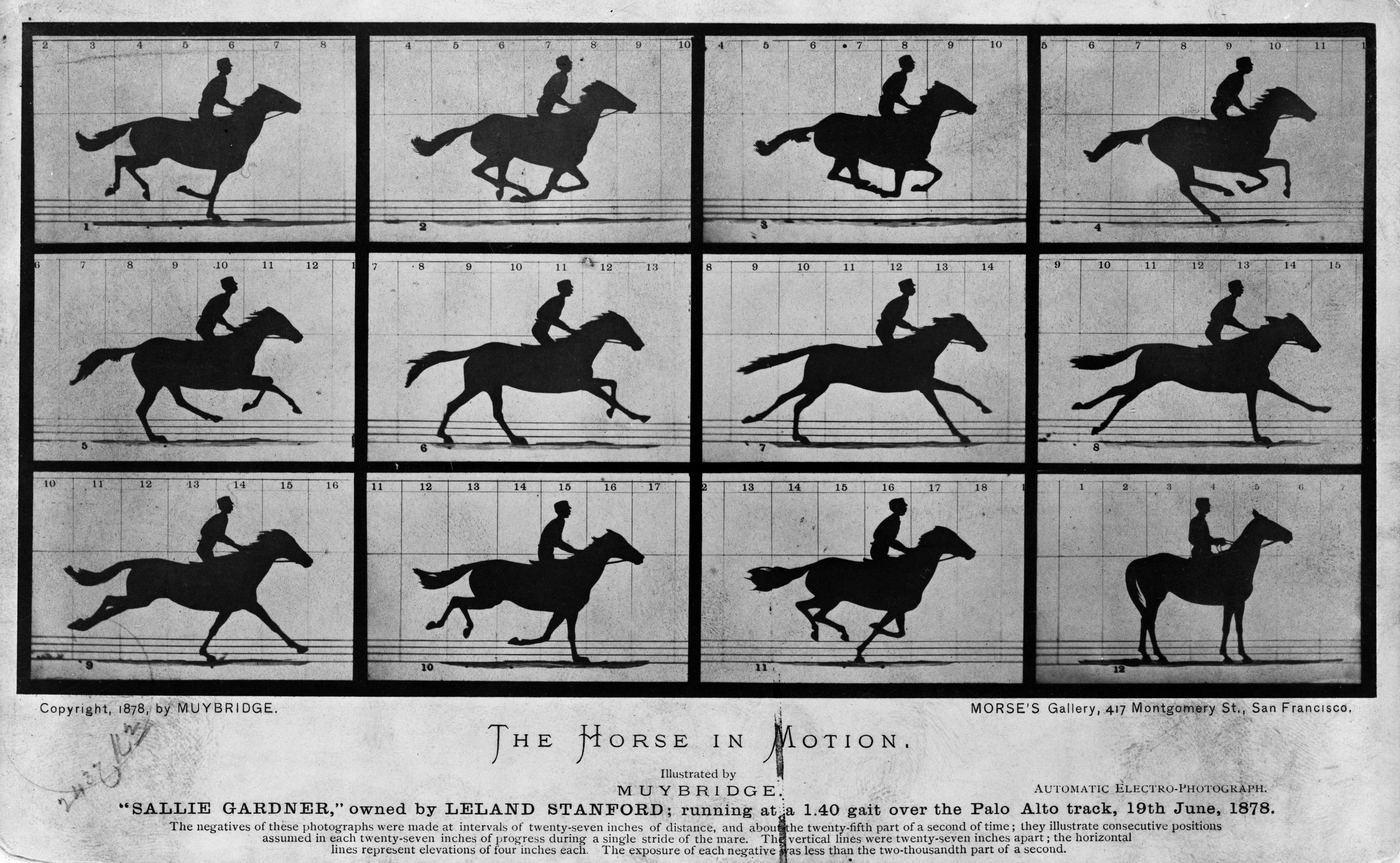

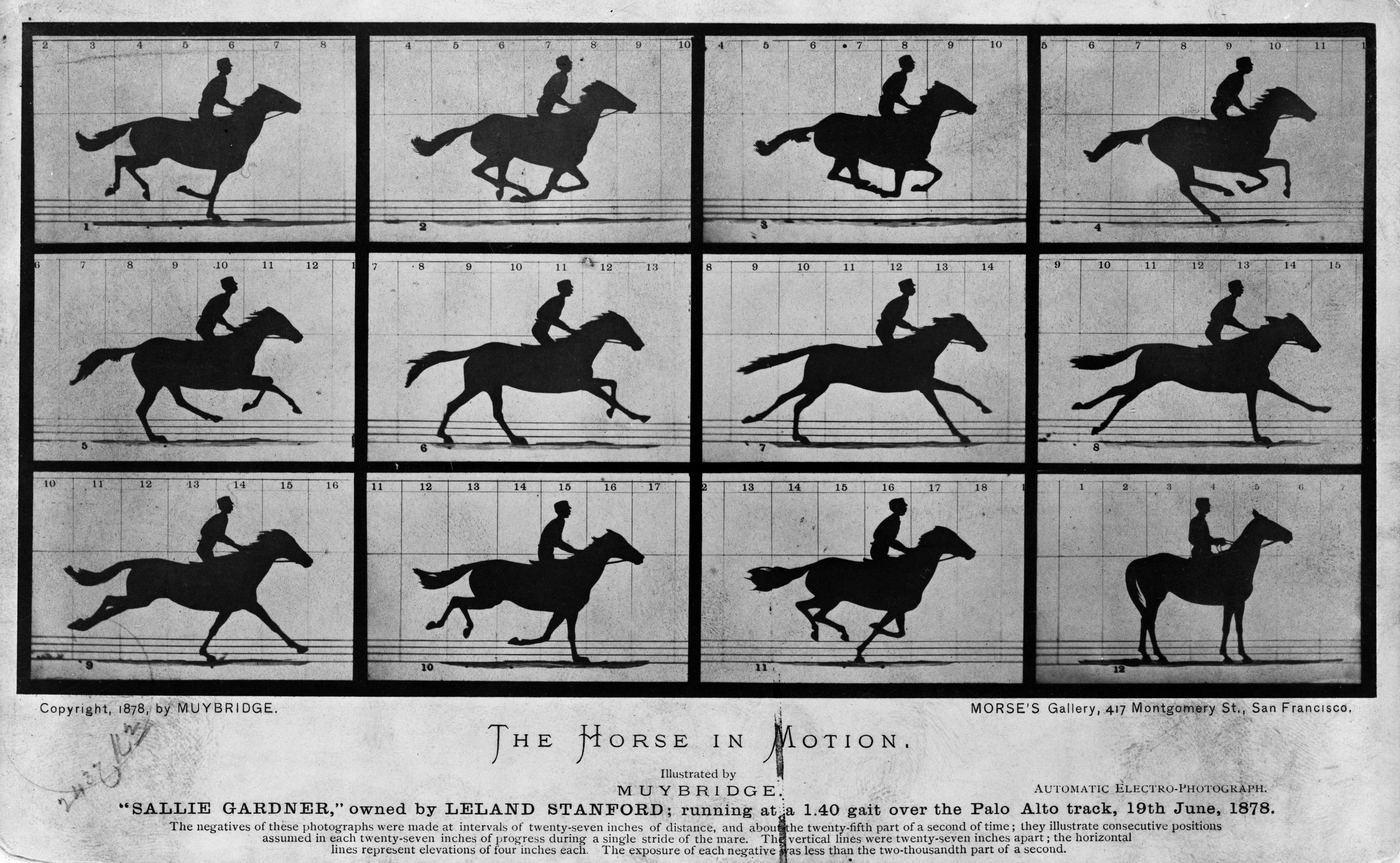

By measuring against your model (the architecture), you get observability over time. Observability is to a software system what Muybridge's photo series was to the real world: it makes formerly incomprehensible behaviour visible, quantifiable and understandable. It helps the team to reason about the behaviour (see JBD), to compare mental models and concepts, and to express and test risk as well as delight. Observability is one of the main tenets of serviceability.

Image provided directly by the Library of Congress Prints and Photographs Division, public domain.

Or, to account for the added dimensions, it's similar to the psychogeography of situationists like Guy Debord, whose dérive set out to provide an objective, historical, both temporal and spatial view of the city by mapping its movements. Thanks to this group, our cities are finally becoming more liveable and include community-based planning.

"Making the city observable implies allowing the city to become an environment for learning."

This quote from Richard Saul Wurman on making "the city observable" comes from M. Steenson's "Architectural Intelligence" (p. 92), which also has a great quote on how patterns rearrange power (p. 59). Without stretching the urbanism metaphor too much, I do like the connection between observability and learning here, and in Steenson's book. It highlights why we need approachable systems that allow us to reason about them: that is the major prerequisite for any kind of real iterative development able to deal with complex emerging features, not just some type of external power defining fitness for an evolutionary architecture.

"Debugging is an iterative process, involving iterative introspection of the various observations and facts reported by the system [...] Evidence needs to be reported by the systems in the form of highly precise and contextual facts and observations."

Learning is what Cindy Sridharan, in "Monitoring and Observability" quoted above, calls introspection. Introspection is more than iteration or feedback in evolution, because it includes both reason and empathy. Introspection is the philosophy of serviceability. We can apply Actor-Network Theory and Promise Theory to describe the characteristics not only in individual SLAs but in consistency terms, all the way up to user expectations and to the analysis of unexpected failures.
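What "highly precise and contextual facts" and iterative introspection might look like in practice can be sketched with structured events: each event records one fact together with its context, and debugging becomes a sequence of ever narrower queries over those facts. A minimal Python sketch; the event fields, the context keys (`version`, `region`) and the in-memory `sink` are assumptions for illustration, not any specific observability tool's schema.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class Event:
    """One structured event: a precise fact plus the context it happened in."""
    service: str
    operation: str
    duration_ms: float
    status: int
    context: dict = field(default_factory=dict)  # hypothetical keys: version, region
    timestamp: float = field(default_factory=time.time)


def emit(event, sink):
    """Serialize the event as one JSON line, as a log pipeline might ingest it."""
    sink.append(json.dumps(asdict(event), sort_keys=True))


def introspect(sink, **criteria):
    """Narrow down recorded facts by top-level fields or context attributes."""
    events = [json.loads(line) for line in sink]

    def matches(e):
        return all(e.get(k, e["context"].get(k)) == v for k, v in criteria.items())

    return [e for e in events if matches(e)]


sink = []
emit(Event("checkout", "POST /pay", 812.0, 500, {"version": "v2", "region": "eu"}), sink)
emit(Event("checkout", "POST /pay", 95.0, 200, {"version": "v1", "region": "eu"}), sink)
failures = introspect(sink, status=500)  # first question: what failed?
```

Each answer suggests the next question (which version, which region?), which is the iterative introspection the quote describes; the richer the context attached to each fact, the further that loop can go.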

Having those models and metaphors at hand, and being able to relate the metrics through introspection, we can explain systems. We can use tools from machine learning, for instance explainability, to dig deeper into understanding the surprising behaviour of evolutionary* systems. ML is already applied to a wide range of software engineering problems, from data center infrastructure to database indices and bundling JavaScript dependencies. There is no reason why we can't apply it to code itself, and to service connections and dependencies, not in an AI sense but in a tool sense, to help us understand them.

I wrote about the end of control earlier, but what excites me about these new, descriptive, observable mashups of systems is that they tackle the socio-technical problem. Maybe they will allow a psychogeography of our systems one day, and with that make software engineering not only more reasonable and scientific, but also more open, more communal and less tribal.

*) I use "evolution" here mostly in the currently prevailing sense of development, as popularized in the book. Personally, however, I am leaning more towards an emergence model, which allows more radical changes and smells less like naturalism. Brian Arthur would call the first "evolution in the narrow sense", whereas I prefer "evolution in the full sense": the system as a whole being interconnected in its fate.
